July 16, 2021 – Facebook Reality Labs (FRL), Facebook’s Augmented and Virtual Reality (AR/VR) research team, is celebrating new results published by its University of California San Francisco (UCSF) research collaborators in The New England Journal of Medicine. The results demonstrate how a person with severe speech loss was able to type out what they wanted to say almost instantly, simply by attempting to speak. In other words, UCSF has restored a person’s ability to communicate by decoding brain signals sent from the motor cortex to the vocal tract. FRL stated that the study marks an important milestone for the field of neuroscience, and that it concludes Facebook’s years-long collaboration with UCSF’s Chang Lab.

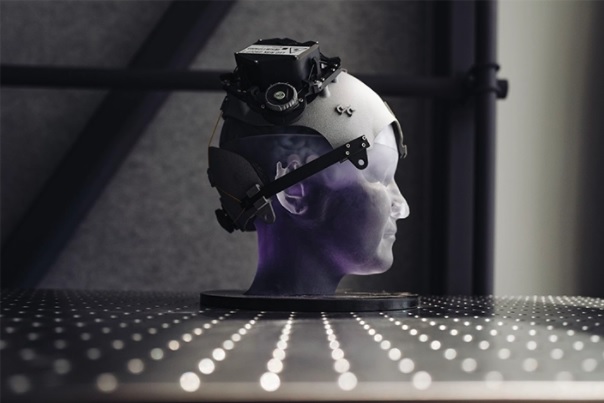

Established in 2017, Facebook Reality Labs’ (FRL) Brain-Computer Interface (BCI) project began with an ambitious long-term goal: to develop a silent, non-invasive speech interface that would let people type just by imagining the words they want to say.

The FRL team has made progress on this mission over the course of four years, investing in the exploration of head-mounted optical BCI as a potential input method for the next computing platform: essentially, a way to communicate in AR/VR with the speed of voice and the discreetness of typing.

However, in a lengthy blog post, FRL stated that Facebook has no interest in developing products that require brain-implanted electrodes. While it still believes in the long-term potential of head-mounted optical BCI technologies, it has now decided to focus its immediate efforts on a different neural interface approach that has a nearer-term path to market: wrist-based devices powered by electromyography (EMG).

FRL explained how EMG works: When you decide to move your hands and fingers, your brain sends signals down your arm via motor neurons, telling them to move in specific ways in order to perform actions like tapping or swiping. EMG can pick up and decode those signals at the wrist and translate them into digital commands for a device. In the near term, these signals will allow users to communicate with their devices with a degree of control that’s highly reliable, subtle, personalizable, and adaptable to many situations. The company added that as this area of research evolves, “EMG-based neural interfaces have the potential to dramatically expand the bandwidth with which we can communicate with our devices, opening up the possibility of things like high-speed typing.”

FRL Research Director, Sean Keller, commented: “We’re developing more natural, intuitive ways to interact with always-available AR glasses so that we don’t have to choose between interacting with our device and the world around us. We’re still in the early stages of unlocking the potential of wrist-based electromyography, but we believe it will be the core input for AR glasses, and applying what we’ve learned about BCI will help us get there faster.”

Speech was the focus of FRL’s BCI research because it’s inherently high bandwidth: you can talk faster than you can type. But speech isn’t the only way to apply this research, according to FRL. The BCI team’s foundational work can also be leveraged to enable intuitive wrist-based controls. As a result, FRL is no longer pursuing a research path to develop a silent, non-invasive speech interface, and will instead pursue new forms of intuitive control with EMG as it focuses on wrist-based input devices for AR/VR.

“As a team, we’ve realized that the biofeedback and real-time decoding algorithms we use for optical BCI research can accelerate what we can do with wrist-based EMG,” said FRL Neural Engineering Research Manager, Emily Mugler. “We really want you to be able to intuitively control our next-generation wristbands within the first few minutes of putting them on. In order to use a subtle control scheme with confidence, you need your device to give you feedback, to confirm it understands your goal… Applying these BCI research concepts to EMG can help wrist-based control feel intuitive and useful right from the start.”

FRL concluded by saying that it will continue to share updates as work progresses, and that later this year it will explain how haptic wearables will add another dimension to the next computing platform and will “enhance our ability to establish presence and learn new interaction paradigms.”

Image credit: Facebook Reality Labs

About the author

Sam is the Founder and Managing Editor of Auganix. With a background in research and report writing, he has been covering XR industry news for the past seven years.