January 12, 2021 – MIT.nano, MIT’s advanced facility for nanoscience and nanotechnology, has announced that the MIT.nano Immersion Lab, MIT’s first open-access facility for augmented and virtual reality (AR/VR) and for interacting with data, is now open and available to MIT students, faculty, researchers, and external users.

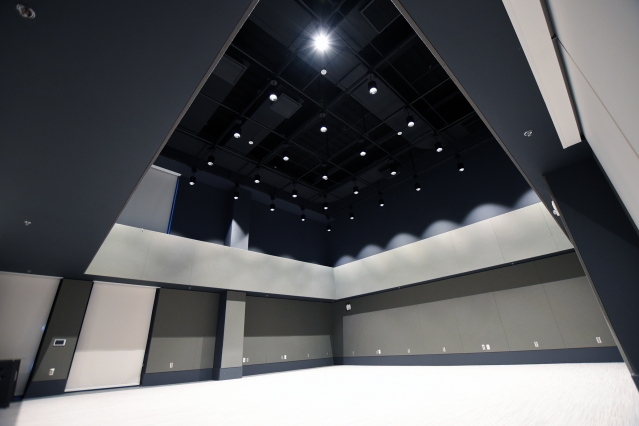

The Immersion Lab, which offers a powerful set of AR and VR capabilities, is located on the third floor of MIT.nano in a two-story space resembling a black-box theater. The lab contains embedded systems, individual equipment and platforms, and the data capacity to support new modes of teaching and applications such as creating and experiencing immersive environments, human motion capture, 3D scanning for digital assets, 360-degree modeling of spaces, interactive computation and visualization, and interfacing of physical and digital worlds in real time.

“Give the MIT community a unique set of tools and their relentless curiosity and penchant for experimentation is bound to create striking new paradigms and open new intellectual vistas. They will probably also invent new tools along the way,” said Vladimir Bulović, the founding Faculty Director of MIT.nano and the Fariborz Maseeh Chair in Emerging Technology. “We are excited to see what happens when students, faculty, and researchers from different disciplines start to connect and collaborate in the Immersion Lab — activating its virtual realms.”

According to MIT.nano, a major focus of the lab is to support data exploration, allowing scientists and engineers to analyze and visualize their research at the human scale with large, multidimensional views, enabling visual, haptic, and aural representations. “The facility offers a new and much-needed laboratory to individuals and programs grappling with how to wield, shape, present, and interact with data in innovative ways,” said Brian W. Anthony, the Associate Director of MIT.nano and faculty lead for the Immersion Lab.

Tools and capabilities

The Immersion Lab not only assembles a variety of advanced hardware and software tools, but is also an instrument in and of itself, said Anthony. The two-story cube, measuring approximately 28 feet on each side, is outfitted with an embedded OptiTrack system that enables precise motion capture via real-time active or passive 3D tracking of objects, as well as full-body motion analysis with the associated software.

Complementing the built-in systems are stand-alone instruments that study the data, analyze and model the physical world, and generate new, immersive content, including:

- A Matterport Pro2 photogrammetric camera to generate 3D, geographically and dimensionally accurate reconstructions of spaces (Matterport can also be used for augmented reality creation and tagging, virtual reality walkthroughs, and 3D models of the built environment);

- A Lenscloud system that uses 126 cameras and custom software to produce high-volume, 360-degree photogrammetric scans of human bodies or human-scale objects;

- Software and hardware tools for content generation and editing, such as 360-degree cameras, 3D animation software, and green screens;

- Backpack computers and VR headsets to allow researchers to test and interact with their digital assets in virtual spaces, untethered from a stationary desktop computer; and

- Hardware and software to visualize complex and multidimensional datasets, including HP Z8 data science workstations and Dell Alienware gaming workstations.

The Immersion Lab is open to researchers from any department, lab, or center at MIT, and is already supporting cross-disciplinary research across the Institute. Expert research staff are also available to assist users.

Members of the MIT community and general public can learn more about the various application areas supported by the Immersion Lab through a new seminar series titled ‘Immersed’ beginning in February. According to MIT.nano, the monthly event will feature talks by experts in topical areas slated to include motion in sports, uses for photogrammetry, rehabilitation and prosthetics, and music/performing arts.

Image / video credit: MIT.nano / DeSant Productions / Vimeo

About the author

Sam is the Founder and Managing Editor of Auganix. With a background in research and report writing, he has been covering XR industry news for the past seven years.