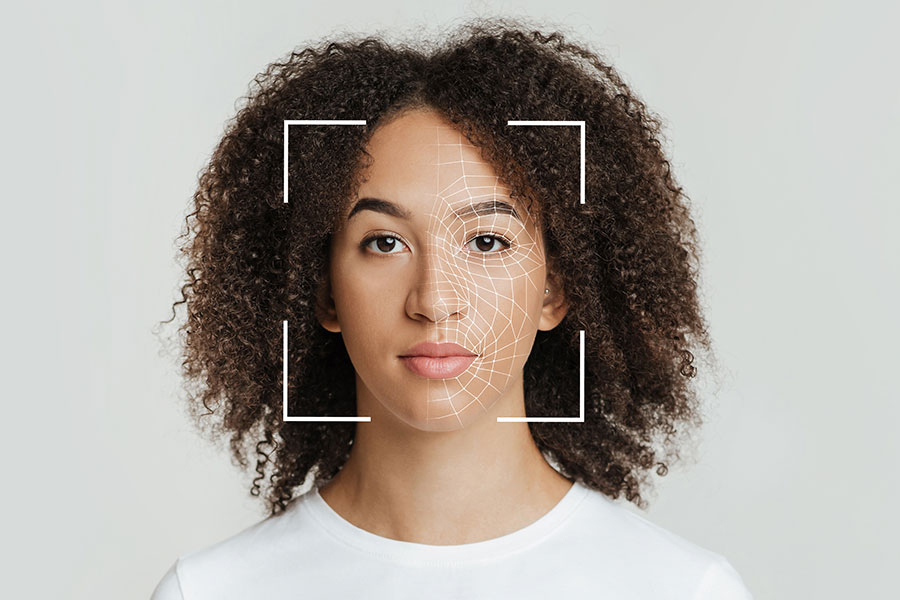

June 21, 2022 – Researchers at MIT have announced that they have trained an artificial neural network to perform facial emotion recognition, work that could eventually help autistic people better recognize the emotions expressed on other people’s faces.

For many people, it is easy to recognize emotions expressed in others’ faces. A smile may mean happiness, while a frown may indicate anger. Autistic people, on the other hand, often have a more difficult time with this task. It is unclear why, but new research, published June 15 in The Journal of Neuroscience, sheds light on the inner workings of the brain to suggest an answer, and it does so by using artificial intelligence (AI) to model the computation in our heads.

MIT research scientist Kohitij Kar, who works in the lab of MIT Professor James DiCarlo, is hoping to zero in on the answer. (DiCarlo, the Peter de Florez Professor in the Department of Brain and Cognitive Sciences, is a member of the McGovern Institute for Brain Research and director of MIT’s Quest for Intelligence.)

Kar began by looking at data provided by two other researchers: Shuo Wang at Washington University in St. Louis and Ralph Adolphs at Caltech. In one experiment, they showed images of faces to autistic adults and to neurotypical controls. The images had been generated by software to vary on a spectrum from fearful to happy, and the participants judged, quickly, whether the faces depicted happiness. Compared with controls, autistic adults required higher levels of happiness in the faces to report them as happy.
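To make the finding more concrete, the judgment task can be pictured as a psychometric curve: the probability of reporting "happy" as a face moves from fearful to happy. The Python sketch below is only an illustration. The logistic shape, the function names, and the threshold and slope values are all assumptions chosen for the example, not figures or methods from the study.

```python
import math

def p_report_happy(morph_level, threshold, slope=10.0):
    """Probability of judging a face 'happy'.

    morph_level runs from 0.0 (fully fearful) to 1.0 (fully happy).
    threshold is the morph level judged 'happy' half of the time.
    The logistic form and the slope are illustrative assumptions.
    """
    return 1.0 / (1.0 + math.exp(-slope * (morph_level - threshold)))

def level_for_probability(target_p, threshold, slope=10.0):
    """Morph level at which P('happy') reaches target_p (inverse logistic)."""
    return threshold + math.log(target_p / (1.0 - target_p)) / slope

# Hypothetical thresholds, NOT values reported in the research.
control_threshold = 0.50
shifted_threshold = 0.60  # needs more happiness before reporting 'happy'

for level in (0.3, 0.5, 0.7):
    print(
        level,
        round(p_report_happy(level, control_threshold), 3),
        round(p_report_happy(level, shifted_threshold), 3),
    )
```

In this toy model, a higher threshold means the same face is less likely to be reported as happy, which is one simple way to picture "requiring higher levels of happiness."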

Kar, who is also a member of the Center for Brains, Minds and Machines, trained an artificial neural network, a complex mathematical function inspired by the brain’s architecture, to carry out this same task of determining whether the images of faces were happy. Kar’s research suggested that sensory neural connections in autistic adults might be “noisy” or inefficient. A more thorough breakdown of Kar’s research is available in the full MIT blog post.

So, where does something like augmented reality (AR) tie into all of this? Kar believes that these computational models of visual processing may have several uses in the future.

“I think facial emotion recognition is just the tip of the iceberg,” said Kar, who believes that these models of visual processing could also be used to select or even generate diagnostic content. For example, artificial intelligence could be used to generate content (such as movies and educational materials) that optimally engages autistic children and adults.

Based on the research, these computational models could potentially be used to adjust facial and other relevant pixels in augmented reality glasses to change what autistic people see, perhaps by exaggerating the levels of happiness (or other emotions) in people’s faces so that those emotions are easier to recognize. According to MIT, Kar plans to pursue work on augmented reality in the future.

Even in a simpler form, AR glasses running facial emotion recognition software could detect people’s emotions and overlay text prompts that describe the likely emotional state of the people the wearer is interacting with. Ultimately, according to Kar, the work helps to validate the usefulness of computational models, especially image-processing neural networks.
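The text-prompt idea could be sketched roughly as follows. This is a minimal Python illustration, assuming some emotion recognition model has already produced a score per emotion. The function name `emotion_prompt`, the score format, and the confidence cutoff are hypothetical and do not come from Kar’s work or any particular AR product.

```python
from typing import Dict, Optional

def emotion_prompt(scores: Dict[str, float],
                   min_confidence: float = 0.6) -> Optional[str]:
    """Return overlay text for the most likely emotion.

    scores maps emotion labels to model confidences in [0, 1]
    (assumed to come from a separate, hypothetical recognizer).
    Returns None when no emotion is confident enough, so the
    glasses would show nothing rather than a misleading prompt.
    """
    if not scores:
        return None
    label, score = max(scores.items(), key=lambda item: item[1])
    if score < min_confidence:
        return None
    return f"Likely {label} ({score:.0%})"

# Example scores are made up for illustration.
print(emotion_prompt({"happy": 0.82, "fearful": 0.10, "neutral": 0.08}))
# prints "Likely happy (82%)"
```

Suppressing the prompt below a cutoff is a design choice in this sketch: an uncertain label could be more confusing to the wearer than no label at all.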

To read the full MIT blog post, click here.

Image credit: MIT

About the author

Sam is the Founder and Managing Editor of Auganix. With a background in research and report writing, he has been covering XR industry news for the past seven years.