May 8, 2019 – Google has announced at its annual I/O developer conference new features in Google Search and Google Lens that use the camera, computer vision and augmented reality (AR) to overlay information and content onto the user’s physical surroundings.

AR in Google Search

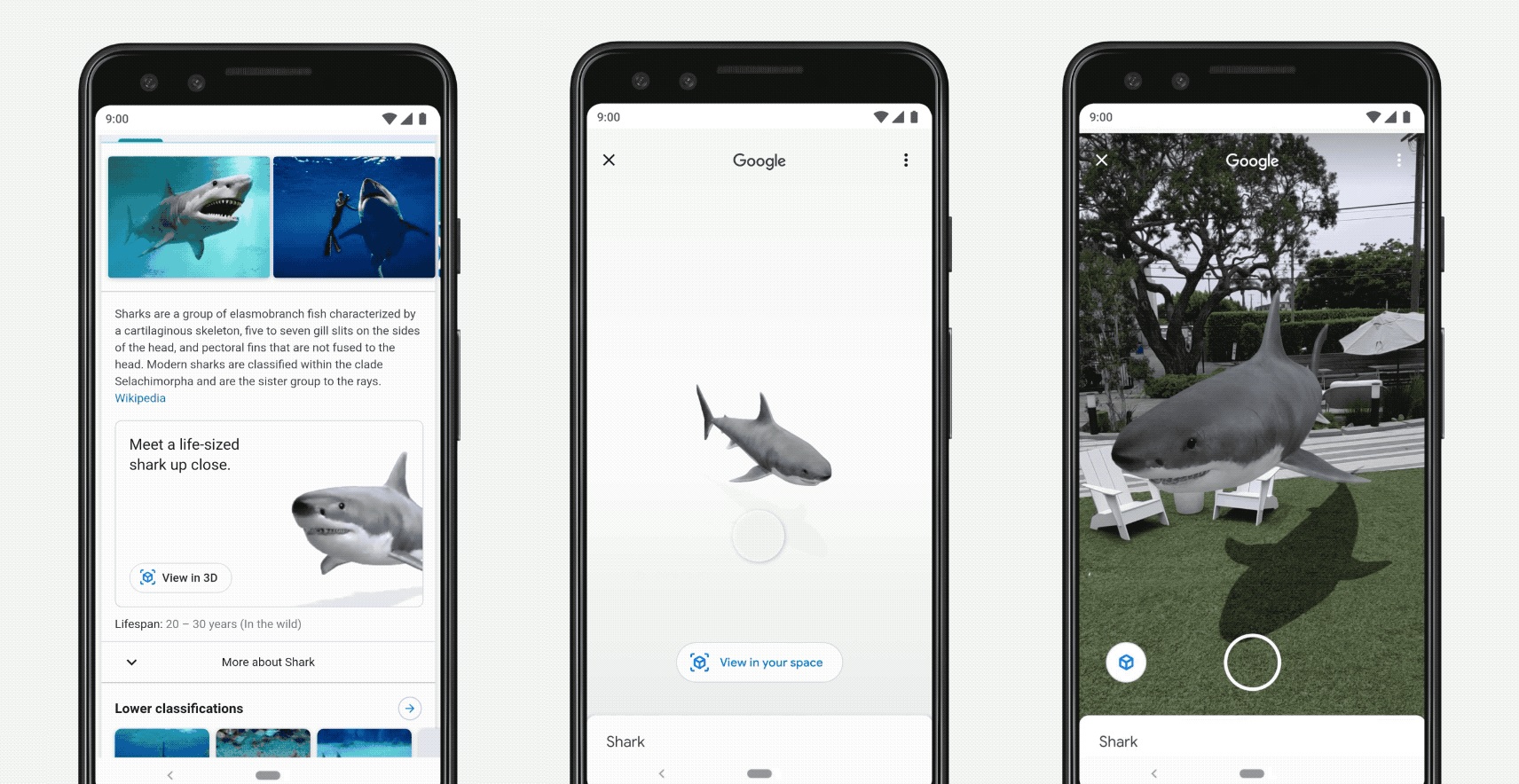

Google has stated that new AR features in Search will begin rolling out later this month. Users will be able to view and interact with 3D objects right from Search and place them directly into their immediate space, giving a sense of scale and detail. The company stated in a blog post: “It’s one thing to read that a great white shark can be 18 feet long. It’s another to see it up close in relation to the things around you. So when you search for select animals, you’ll get an option right in the Knowledge Panel to view them in 3D and AR.”

The company has also said that it is working with partners like NASA, New Balance, Samsung, Target, Visible Body, Volvo, Wayfair and others in order to surface their content in Search. This means that users will eventually be able to interact with a range of 3D models and put them into the real world from Google Search.

New features in Google Lens

Google Lens taps into machine learning, computer vision and tens of billions of facts in the Knowledge Graph to answer questions for users. Google states that it is now evolving Lens to provide more visual answers to visual questions, and uses the example of ordering food at a restaurant:

“Lens can automatically highlight which dishes are popular, right on the physical menu. When you tap on a dish, you can see what it actually looks like and what people are saying about it, thanks to photos and reviews from Google Maps.”

In order to do this, Lens first has to identify all the dishes on the menu, looking for things like the font, style, size and color to differentiate dishes from descriptions. Next, it matches the dish names with the relevant photos and reviews for that restaurant in Google Maps.
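The two steps described above can be illustrated with a rough sketch. This is not Google's implementation: the `TextLine` structure, the "bold or larger than the median font size" rule and the fuzzy name matching are all assumptions made purely for illustration, and the menu data is invented.

```python
from dataclasses import dataclass
from difflib import get_close_matches


@dataclass
class TextLine:
    """Hypothetical representation of one line of text detected on a menu."""
    text: str
    font_size: float
    bold: bool


def find_dish_names(lines):
    """Step 1 (assumed heuristic): treat bold or larger-than-median lines as dish names."""
    sizes = sorted(line.font_size for line in lines)
    median = sizes[len(sizes) // 2]
    return [line.text for line in lines if line.bold or line.font_size > median]


def match_dishes(dish_names, reviewed_dishes, cutoff=0.6):
    """Step 2 (assumed approach): fuzzy-match menu names to dishes that have reviews."""
    lookup = {name.lower(): name for name in reviewed_dishes}
    matches = {}
    for dish in dish_names:
        close = get_close_matches(dish.lower(), list(lookup), n=1, cutoff=cutoff)
        if close:
            matches[dish] = lookup[close[0]]
    return matches


# Invented example data standing in for a scanned menu and a restaurant's reviews.
menu = [
    TextLine("Margherita Pizza", 14, True),
    TextLine("Tomato, mozzarella, basil", 10, False),
    TextLine("Spaghetti Carbonara", 14, True),
    TextLine("Egg, pecorino, guanciale", 10, False),
]
reviewed = ["Margherita pizza", "Spaghetti carbonara", "Tiramisu"]

dishes = find_dish_names(menu)
matched = match_dishes(dishes, reviewed)
```

A production system would rely on learned models rather than fixed rules like these; the sketch only shows how visual cues and name matching could fit together.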

The company has stated that users can also point their camera at foreign text – Lens will then automatically detect the language and overlay the translation right on top of the original words, in more than 100 languages.

Google is also working with other organizations to connect helpful digital information to things in the physical world. One example, beginning next month, is with the de Young Museum in San Francisco, where users will be able to use Lens to see hidden stories about paintings, directly from the museum’s curators. Another is in upcoming issues of Bon Appetit magazine, where users will be able to point their camera at a recipe and have the page come to life and show them how to make it.

Bringing Lens to Google Go

In addition to the above, Google is introducing a new feature to assist those who have difficulty reading: users will be able to point the camera at text and have Lens read it out loud. The software highlights the words as they are spoken, so that users can follow along and understand the full context of what they are seeing. They can also tap on a specific word to search for it and learn its definition. This feature is launching first in Google Go, Google’s Search app for first-time smartphone users. Lens in Google Go is just over 100KB and works on phones that cost less than $50.

Image credit: Google

About the author

Sam is the Founder and Managing Editor of Auganix. With a background in research and report writing, he has been covering XR industry news for the past seven years.