January 23, 2021 – The US Army Combat Capabilities Development Command (DEVCOM) Army Research Laboratory (ARL) has announced that it is using augmented reality (AR) overlays in its research on detecting roadside explosive hazards such as improvised explosive devices (IEDs), unexploded ordnance and landmines.

Route reconnaissance in support of convoy operations remains critical to keeping Soldiers safe from these hazards, which continue to threaten operations abroad and have proved to be an evolving and problematic adversarial tactic. To address the problem, the Defense Threat Reduction Agency (DTRA) has funded ARL and other research collaborators through the ‘Blood Hound Gang Program’, which takes a system-of-systems approach to standoff explosive hazard detection.

Kelly Sherbondy, Program Manager at the lab, said “Logically, a system-of-systems approach to standoff explosive hazard detection research is warranted going forward,” adding, “Our collaborative methodology affords implementation of state-of-the-art technology and approaches while rapidly progressing the program with seasoned subject matter experts to meet or exceed military requirements and transition points.”

The program has seven external collaborators from across the country: the US Military Academy, the University of Delaware Video/Image Modeling and Synthesis Laboratory, Ideal Innovations Inc., Alion Science and Technology, The Citadel, IMSAR and AUGMNTR.

In Phase I of the program, researchers spent 15 months evaluating mostly high technology readiness level (TRL) standoff detection technologies against a variety of explosive hazard emplacements. A lower-TRL standoff detection sensor, focused on detecting explosive hazard triggering devices, was also developed and assessed. According to the Army, the Phase I assessment covered probability of detection, false alarm rate and other key metrics that will ultimately inform a down-selection of the best-performing sensors for Phase II of the program.

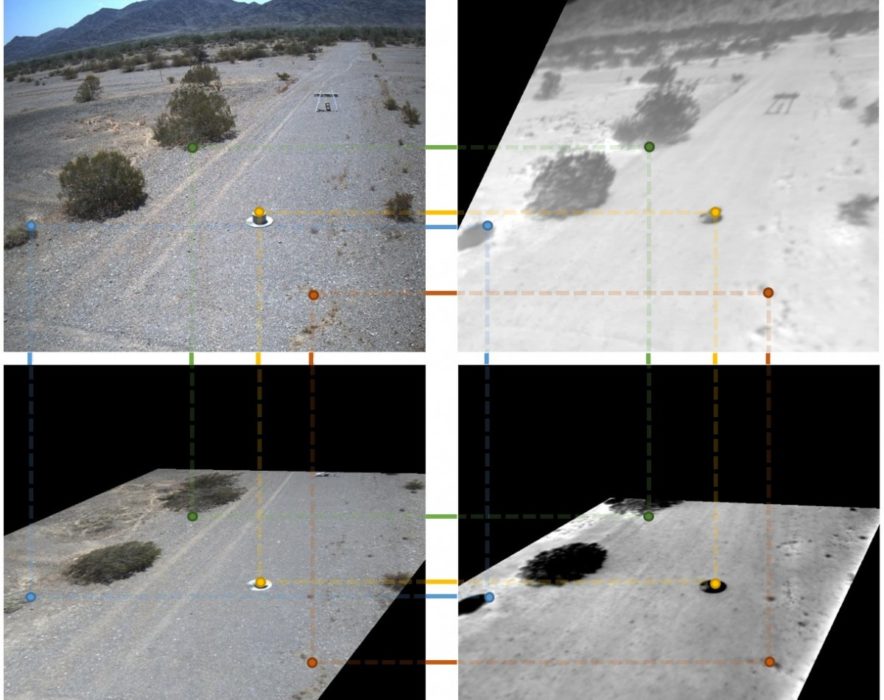

The sensors evaluated during Phase I included an airborne synthetic aperture radar, ground-vehicle and small unmanned aerial vehicle LIDAR, high-definition electro-optical cameras, long-wave infrared cameras and a non-linear junction detection radar. Researchers carried out a field test in representative real-world terrain along a 7-kilometer test track, with a total of 625 emplacements comprising a variety of explosive hazards, simulated clutter and calibration targets. They collected data both before and after emplacement to simulate the real-world changes that occur between sensor passes.

Terabytes of data were collected across the sensor sets, the volume needed to adequately train artificial intelligence/machine learning (AI/ML) algorithms. The algorithms then performed automatic target detection for each sensor. According to the Army, the sensor data are pixel-aligned through geo-referencing, and the AI/ML techniques can be applied to some or all of the combined sensor data for a specific area. The detection algorithms also assign a ‘confidence level’ to each suspected target, which is displayed to the user as an augmented reality overlay. Finally, the detection algorithms were run with various sensor permutations so that the performance results could be aggregated and used to determine the best course of action for Phase II.
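To illustrate how such a sensor-permutation comparison might work in principle, here is a minimal Python sketch. It is not the program's software: the grid cells, sensor abbreviations, confidence values and 0.5 threshold are all hypothetical, and the simple mean-confidence fusion is a stand-in technique, since the article does not describe the actual fusion method. It assumes that, because the layers are pixel-aligned via geo-referencing, every sensor reports confidences on the same grid cells, and it expresses false alarms per kilometer of the 7-kilometer track.

```python
from itertools import combinations

# Hypothetical, illustrative inputs (NOT program data).
# Pixel alignment via geo-referencing is assumed to mean every sensor
# reports confidences on the same (row, col) grid cells.
TARGET_CELLS = {(2, 3), (5, 1), (7, 8)}  # cells holding a known emplacement
TRACK_KM = 7.0  # test-track length used to normalise false alarms

SENSOR_CONFIDENCE = {
    "SAR":  {(2, 3): 0.9, (5, 1): 0.4, (4, 4): 0.6},
    "LWIR": {(2, 3): 0.7, (7, 8): 0.8, (1, 1): 0.5},
    "EO":   {(5, 1): 0.8, (7, 8): 0.6, (4, 4): 0.3},
}


def fuse(sensors, layers):
    """Average each cell's confidence over the chosen sensors (missing = 0)."""
    cells = set().union(*(layers[s] for s in sensors))
    return {c: sum(layers[s].get(c, 0.0) for s in sensors) / len(sensors)
            for c in cells}


def score(fused, targets, threshold, track_km):
    """Return (probability of detection, false alarms per km)."""
    declared = {c for c, conf in fused.items() if conf >= threshold}
    pd = len(declared & targets) / len(targets)
    far = len(declared - targets) / track_km
    return pd, far


def rank_combinations(layers, targets, threshold=0.5, track_km=TRACK_KM):
    """Score every non-empty sensor subset: highest Pd first, then lowest FAR."""
    results = []
    names = sorted(layers)
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            pd, far = score(fuse(combo, layers), targets, threshold, track_km)
            results.append((combo, pd, far))
    return sorted(results, key=lambda r: (-r[1], r[2]))


if __name__ == "__main__":
    for combo, pd, far in rank_combinations(SENSOR_CONFIDENCE, TARGET_CELLS):
        print(f"{'+'.join(combo):<14} Pd={pd:.2f}  FA/km={far:.2f}")
```

Because sensor order does not change a fused result, the sketch enumerates unordered combinations rather than true permutations. Ranking by detection probability first and false alarms second mirrors the stated goal of maximizing detection while minimizing false alarms, but a real evaluation would likely weigh the two differently.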

“The accomplishments of these efforts are significant to ensuring the safety of the warfighter in the current operation environment,” said Lt. Col. Mike Fuller, US Air Force Explosive Ordnance Disposal and DTRA Program Manager.

The Army noted that future research will enable real-time automatic target detection displayed through an augmented reality engine. The three-year effort will culminate in demonstrations at multiple test facilities to show the technology’s robustness over varying terrain.

“We have side-by-side comparisons of multiple modalities against a wide variety of realistic, relevant target threats, plus an evaluation of the fusion of those sensors’ output to determine the most effective way to maximize probability of detection and minimize false alarms,” Fuller said. “We hope that the Army and the Joint community will both benefit from the data gathered and lessons learned by all involved.”

Image credit: US Army

About the author

Sam is the Founder and Managing Editor of Auganix. With a background in research and report writing, he has been covering XR industry news for the past seven years.