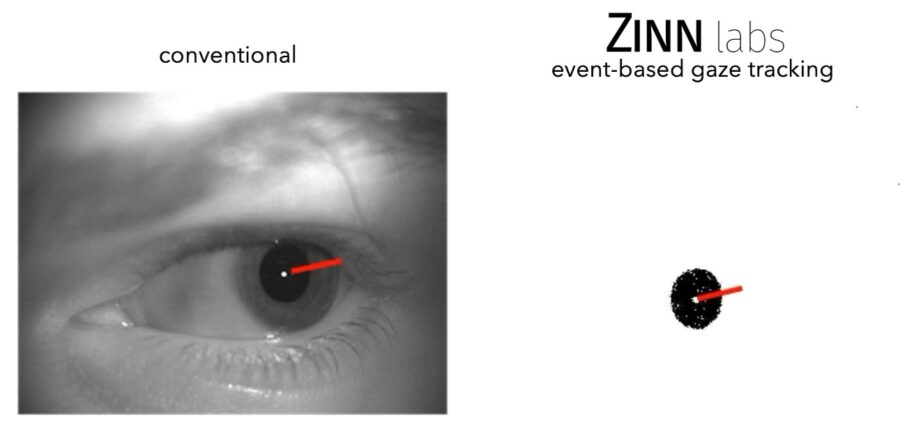

January 31, 2024 – Zinn Labs, a provider of pupil and gaze tracking systems for head-worn devices, has announced the introduction of its event-based gaze-tracking system for augmented, virtual and mixed reality (AR/VR/MR) headsets and smart frames.

According to Zinn Labs, the core enabling feature of event-based sensing is the faster and more efficient capture of the relevant signals for eye tracking. As XR computing devices move toward increasingly intuitive user interfaces, gaze tracking is a prerequisite for several component technologies.

With XR likely to serve as the interface for artificial intelligence (AI) in the coming years, smart eyewear is rapidly emerging as a category that offers a portal through which users can access the benefits of AI. According to Zinn Labs, the attention signal provided by gaze can seamlessly connect an AI output to the user’s intentions, since gaze is the most natural method of interacting with the digital environment.

Kevin Boyle, CEO of Zinn Labs, explained: “Zinn Labs’ event-based gaze-tracking reduces bandwidth by two orders of magnitude compared to video-based solutions, allowing it to scale to previously impractical applications and form factors.”
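To put "two orders of magnitude" in concrete terms, the short Python sketch below works through the arithmetic for a hypothetical frame-based eye camera. The resolution, bit depth and frame rate are illustrative assumptions, not figures published by Zinn Labs; only the factor of 100 comes from the quote above.

```python
def video_bandwidth_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Raw (uncompressed) data rate of a frame-based camera."""
    return width * height * bytes_per_pixel * fps


# Hypothetical frame-based eye camera (assumed values for illustration only):
# 320x320 pixels, 8-bit monochrome, 120 frames per second.
video_rate = video_bandwidth_bytes_per_s(320, 320, 1, 120)

# "Two orders of magnitude" means a factor of 10^2 = 100.
REDUCTION_FACTOR = 10 ** 2
event_rate = video_rate / REDUCTION_FACTOR

print(f"frame-based video: {video_rate / 1e6:.2f} MB/s")        # 12.29 MB/s
print(f"100x lower data rate: {event_rate / 1e3:.1f} kB/s")     # 122.9 kB/s
```

Under these assumed camera parameters, a 100x reduction takes the data stream from roughly 12 MB/s down to roughly 120 kB/s per eye.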

The low compute footprint of Zinn Labs’ 3D gaze estimation gives it the flexibility to support low-power modes for use in smart wearables that look like normal eyewear. The company added that in other configurations, the gaze tracker can enable low-latency, slippage-robust tracking at speeds above 120 Hz for high-performance applications.

To achieve these tracking speeds, Zinn Labs uses the Prophesee GenX320 sensor, which enables high-refresh-rate, low-latency gaze tracking.

Zinn Labs DK1 available soon

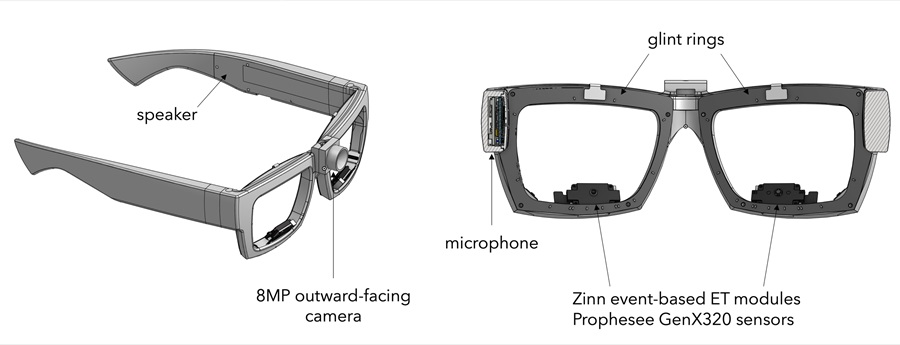

The Zinn Labs DK1 Gaze-enabled Eyewear Platform is a development kit equipped with a binocular event-based gaze-tracking system and an outward-facing world camera, and is powered by Zinn Labs’ 3D gaze estimation algorithms. The DK1 uses Prophesee GenX320 event-based Metavision sensors, which are the smallest event-based sensors on the market, according to Zinn Labs.

“Frame-based acquisition techniques don’t meet the speed, low latency, size and power requirements of AR/MR wearables and headsets that are practical to use and provide a realistic user experience,” said Luca Verre, CEO and co-founder of Prophesee. “Our GenX320 was developed specifically with these real-time and processing efficiency needs in mind, delivering the advantages of event-based vision to a wide range of products, such as those that will be enabled by Zinn Labs’ breakthrough AR/MR platform.”

Zinn Labs added that its DK1 supports slippage-robust gaze tracking with sub-1° accuracy and runs at 120 Hz with 10 ms end-to-end latency. The development kit also comes with demo applications, including a wearable AI assistant (shown above), and can be used for performance evaluation or customer proof-of-concept integration projects.
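For a rough sense of what these figures mean in practice, the Python sketch below converts the quoted 120 Hz rate into a sample period, compares it with the quoted 10 ms latency, and translates a 1° gaze error into an on-target offset. The 500 mm viewing distance and the helper function names are assumptions chosen for illustration, not part of Zinn Labs' specifications.

```python
import math


def sample_period_ms(rate_hz):
    """Time between consecutive gaze samples, in milliseconds."""
    return 1000.0 / rate_hz


def gaze_error_mm(angular_error_deg, viewing_distance_mm):
    """Linear offset on a target plane caused by a given angular gaze error."""
    return viewing_distance_mm * math.tan(math.radians(angular_error_deg))


period = sample_period_ms(120)          # quoted tracking rate
latency_in_samples = 10.0 / period      # quoted 10 ms end-to-end latency
offset = gaze_error_mm(1.0, 500.0)      # assumed 500 mm viewing distance

print(f"sample period: {period:.2f} ms")                     # 8.33 ms
print(f"latency: {latency_in_samples:.2f} sample periods")   # 1.20
print(f"1 degree at 500 mm: {offset:.2f} mm")                # 8.73 mm
```

In other words, the quoted latency is a little over one sample period, and a sub-1° error corresponds to less than about 9 mm at the assumed half-metre distance.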

The company will be presenting its technology and demonstrating the DK1 at SPIE AR | VR | MR 2024 this week in San Francisco, CA. For more information on Zinn Labs and its gaze tracking solutions for XR devices, visit the company’s website.

Image / video credit: Zinn Labs / Prophesee / YouTube

About the author

Sam is the Founder and Managing Editor of Auganix. With a background in research and report writing, he has been covering XR industry news for the past seven years.